Ep #42: NGINX Uncovered Part 1: Architecture and Core Building Blocks

From master and worker processes to the event loop, explore the internals that make NGINX one of the fastest and most reliable web servers in the world.

Ep #42: Breaking Down Complex System Design Components

By Amit Raghuvanshi | The Architect’s Notebook

🗓️ Sep 23, 2025 · Free Post ·

What You’ll Gain from This Article

In this article, you’ll gain a comprehensive understanding of NGINX - from its foundational concepts to the intricate details of its architecture and core components. You’ll learn how NGINX efficiently handles tens of thousands of concurrent connections on a single server through its event-driven design, and explore real-world applications, including how industry leaders like Netflix leverage NGINX to serve vast amounts of content at scale. Whether you’re a developer, system architect, or technology enthusiast, this deep dive will equip you with the insights needed to build scalable, high-performance web infrastructures.

What is NGINX?

NGINX (pronounced "engine-x") is an open-source, high-performance web server, reverse proxy server, load balancer, and HTTP cache. It was initially developed by Igor Sysoev in 2002 to address the C10K problem (efficiently handling 10,000 concurrent connections) [1], which was a significant challenge for traditional web servers like Apache [2] at the time. Released publicly in 2004, NGINX has become one of the most popular web servers, powering over 400 million websites and applications worldwide, including major platforms like Netflix, Airbnb, and Dropbox.

NGINX is known for its event-driven architecture, low resource consumption, and ability to handle high concurrency with minimal latency. It is highly modular, extensible, and versatile, making it suitable for a wide range of use cases beyond serving static web content.

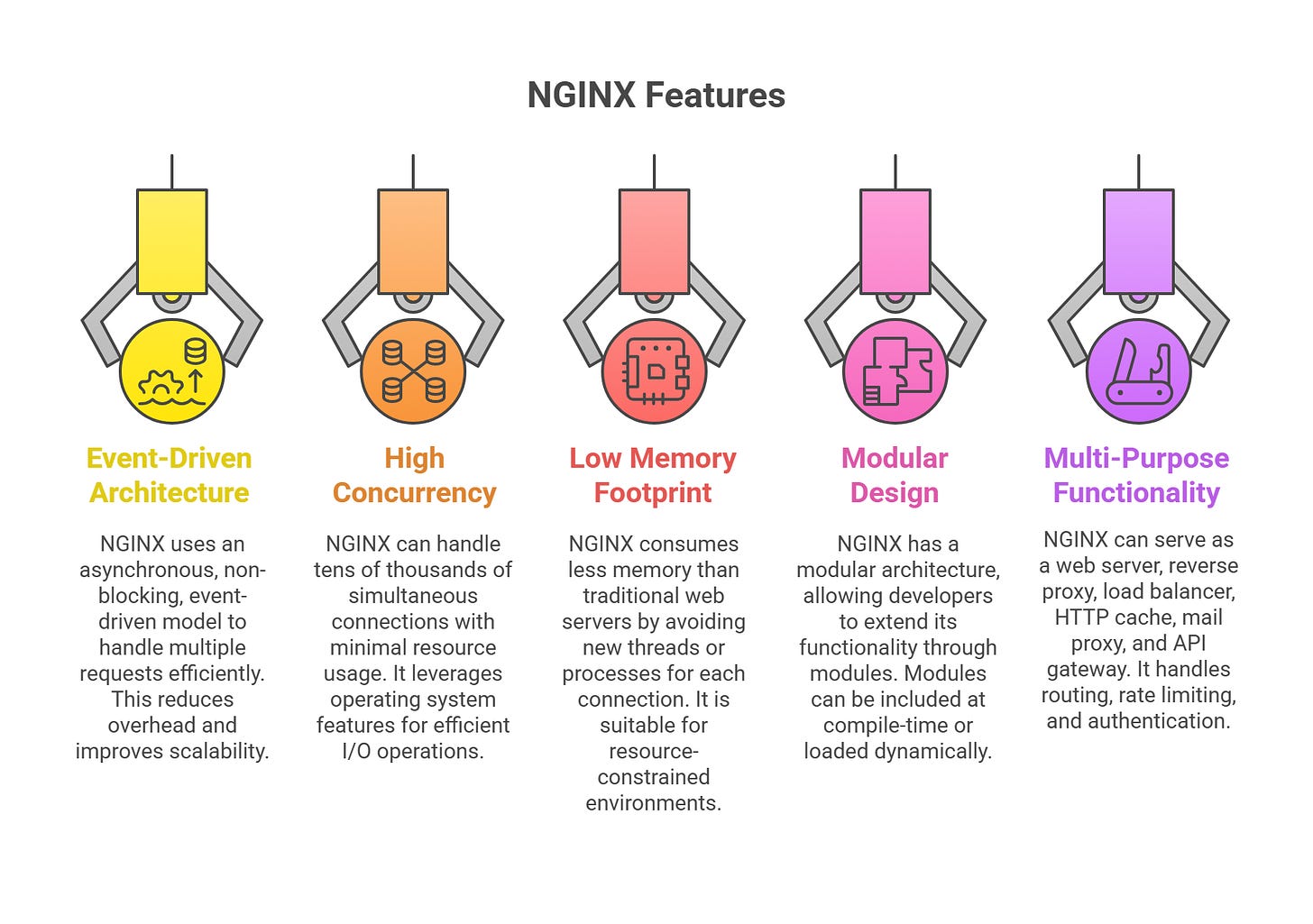

Key Characteristics of NGINX

Event-Driven Architecture:

Unlike traditional web servers (e.g., Apache with its prefork or worker MPMs) that use a process-based or thread-based model (one process or thread per connection), NGINX employs an asynchronous, non-blocking, event-driven model.

A single NGINX worker process can handle thousands of concurrent connections by multiplexing them, so a small number of worker processes [3] is enough to serve all of a server's traffic.

Each worker process operates in an event loop, processing multiple requests efficiently without spawning new threads or processes for each connection. This reduces overhead and improves scalability.

High Concurrency:

NGINX is designed to handle tens of thousands of simultaneous connections with minimal resource usage, making it ideal for high-traffic websites and applications.

It achieves this through efficient handling of I/O operations, leveraging operating system features like epoll (Linux) or kqueue (BSD systems).
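As a rough sizing sketch (illustrative values, not recommendations), the theoretical ceiling on simultaneous connections is worker_processes multiplied by worker_connections, and each worker also needs enough file descriptors, which worker_rlimit_nofile can raise:

```nginx
# Main context. Illustrative numbers: 4 workers x 4096 connections
# = at most 16384 simultaneous connections. When proxying, each client
# request also holds an upstream connection, so real client capacity is lower.
worker_processes 4;
worker_rlimit_nofile 16384;   # per-worker open-file limit, kept above worker_connections

events {
    worker_connections 4096;
}
```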

Low Memory Footprint:

NGINX consumes significantly less memory than traditional web servers because it avoids creating new threads or processes for each connection.

Its lightweight design makes it suitable for resource-constrained environments, such as cloud servers or containerized deployments.

Modular Design:

NGINX is built with a modular architecture, allowing developers to extend its functionality through modules.

Modules can be compiled into the NGINX binary or, since NGINX 1.9.11 (and in NGINX Plus), built as dynamic modules and loaded at startup with the load_module directive.

Examples of modules include support for HTTP/2, WebSocket, compression, authentication, and more.
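A minimal sketch of loading a dynamic module, assuming NGINX was built (or packaged) with the image filter module as a dynamic module; the exact file name and path vary by distribution, so treat them as placeholders:

```nginx
# Main (top-level) context, near the top of nginx.conf.
# Relative paths are resolved against the NGINX prefix directory.
load_module modules/ngx_http_image_filter_module.so;

http {
    # Directives provided by the module (e.g. image_filter) become usable here.
}
```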

Multi-Purpose Functionality:

Web Server: Serves static content (e.g., HTML, CSS, JavaScript, images) with high efficiency and can act as a reverse proxy for dynamic content.

Reverse Proxy: Forwards client requests to backend servers (e.g., Node.js applications, PHP-FPM, or Python application servers such as Gunicorn) and returns their responses to clients.

Load Balancer: Distributes incoming traffic across multiple backend servers to ensure high availability and scalability.

HTTP Cache: Caches responses to reduce load on backend servers and improve response times for clients.

Mail Proxy: Supports proxying for email protocols (IMAP, POP3, SMTP).

API Gateway: Acts as a single entry point for APIs, handling routing, rate limiting, and authentication.
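Several of these roles can live in one configuration. The sketch below is illustrative only: the upstream name, hostnames, paths, and the app_cache and api_limit zone names are hypothetical, and the mail proxy role (configured in a separate mail context) is omitted:

```nginx
http {
    # HTTP cache: responses stored on disk, keys and metadata in a 10 MB shared zone
    proxy_cache_path /var/cache/nginx keys_zone=app_cache:10m;

    # API gateway-style rate limiting: 10 requests/second per client IP address
    limit_req_zone $binary_remote_addr zone=api_limit:10m rate=10r/s;

    # Load balancer: requests are spread round-robin across these backends by default
    upstream app_servers {
        server app1.internal:8080;
        server app2.internal:8080;
    }

    server {
        listen 80;

        # Web server: files under /static/ are served from /var/www/static/
        location /static/ {
            root /var/www;
        }

        # Reverse proxy with caching and rate limiting for API traffic
        location /api/ {
            limit_req zone=api_limit burst=20;
            proxy_cache app_cache;
            proxy_cache_valid 200 1m;   # cache successful responses for one minute
            proxy_pass http://app_servers;
        }
    }
}
```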

NGINX Internals and Architecture

NGINX's architecture is designed to handle high concurrency with minimal resource usage, making it a standout choice for modern web servers, reverse proxies, and load balancers. Its internals are built around a master-worker process model [4] and an event-driven, asynchronous architecture, which together enable NGINX to efficiently process thousands of simultaneous connections.

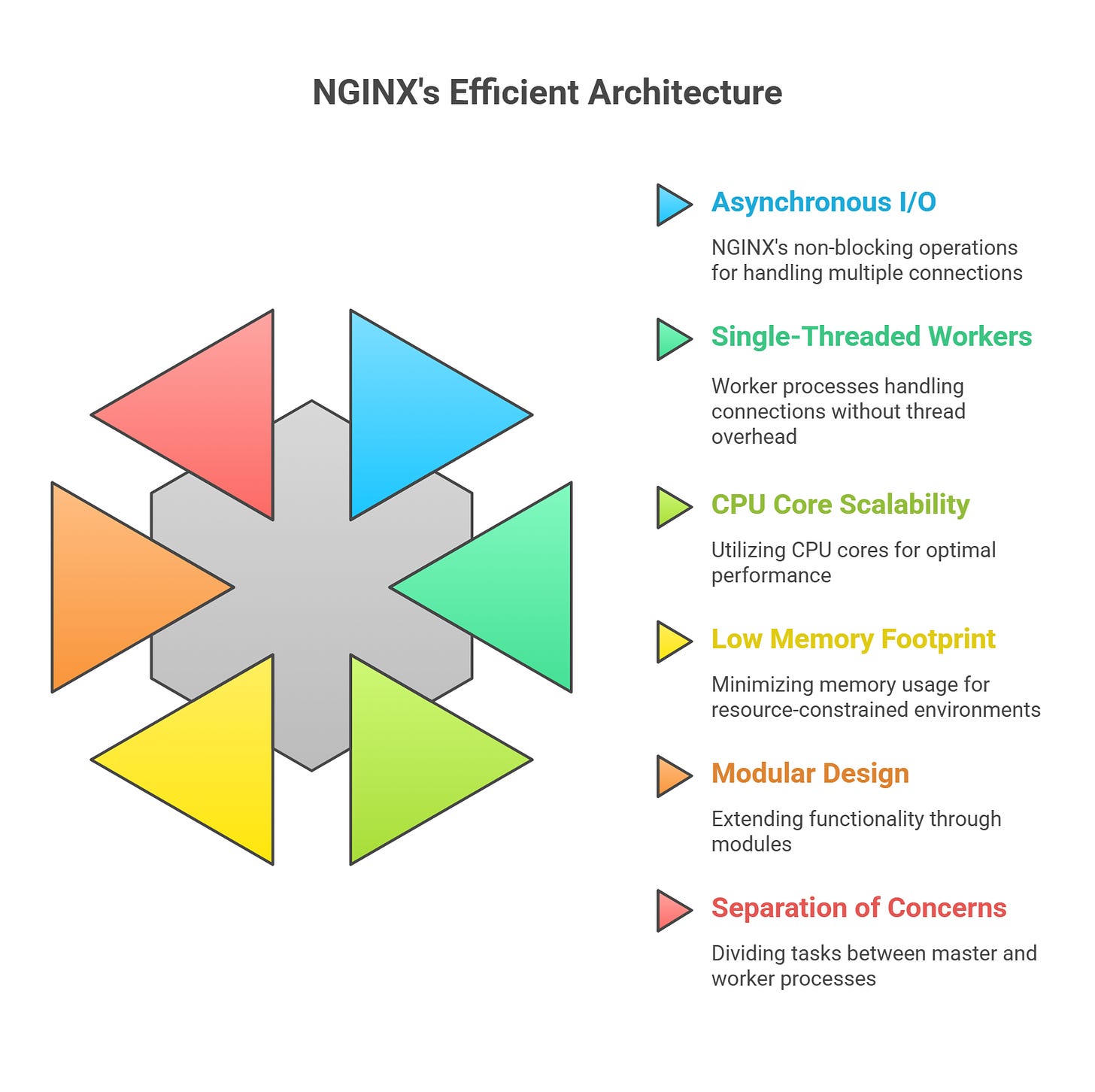

Core Architecture Principles

NGINX's design is rooted in the following principles:

Asynchronous, Non-Blocking I/O:

NGINX uses an event-driven model that avoids blocking operations, allowing each worker process to handle many connections with a single thread. That thread never waits on an individual slow client or backend; the only place it sleeps is in the event-notification call, when no connection has work ready.

It leverages efficient operating system mechanisms such as epoll (Linux) and kqueue (FreeBSD/macOS) to monitor I/O events; where these are unavailable, as on Windows, it falls back to the less scalable select() or poll() methods.

Single-Threaded Worker Processes:

Each worker process runs a single-threaded event loop, handling multiple client connections concurrently.

This avoids creating a thread or process for every connection and keeps context switching to a minimum, unlike traditional process-per-connection models (e.g., Apache's prefork MPM).

Scalability Across CPU Cores:

NGINX creates a small number of worker processes, typically equal to the number of CPU cores, to fully utilize hardware resources.

Worker processes share listening sockets, and the operating system kernel handles load balancing between them.
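In configuration terms, this typically looks like the following sketch; worker_cpu_affinity is optional and, with the value auto (NGINX 1.9.10+), pins each worker to its own CPU:

```nginx
# Main (top-level) context
worker_processes auto;      # one worker per available CPU core
worker_cpu_affinity auto;   # optionally bind each worker to a separate CPU (Linux and FreeBSD only)
```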

Low Memory Footprint:

By avoiding per-connection processes or threads, NGINX minimizes memory usage, making it suitable for resource-constrained environments.

Modular Design:

NGINX's functionality is extended through modules, which can be compiled in statically or, since NGINX 1.9.11 (and in NGINX Plus), loaded dynamically at startup.

This modularity allows NGINX to support a wide range of protocols and features, from HTTP to WebSocket to TCP/UDP proxying.
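As an illustration of the TCP/UDP side, the sketch below uses the stream module, assuming NGINX was built with it (statically or as a dynamic module); the addresses and the db.internal hostname are hypothetical:

```nginx
# Top-level "stream" context, a sibling of the "http" context
stream {
    upstream dns_servers {
        server 10.0.0.53:53;
        server 10.0.0.54:53;
    }

    server {
        listen 53 udp;                 # UDP load balancing across two DNS servers
        proxy_pass dns_servers;
    }

    server {
        listen 5432;                   # plain TCP proxying, e.g. to a database
        proxy_pass db.internal:5432;
    }
}
```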

Separation of Concerns:

The master process handles administrative tasks (e.g., configuration, process management), while worker processes focus on handling client connections.

This separation ensures stability and efficient resource allocation.
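The same separation shows up in the layout of nginx.conf. The annotated skeleton below is a sketch with illustrative values (the pid and log paths vary by distribution):

```nginx
# Main context: process-level settings the master applies when setting up workers
user  nginx;
worker_processes  auto;
pid   /run/nginx.pid;
error_log  /var/log/nginx/error.log warn;

# events context: tunes the event loop inside each worker process
events {
    worker_connections 1024;
}

# http context: how workers process HTTP requests
http {
    server {
        listen 80;
        root /usr/share/nginx/html;
    }
}
```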

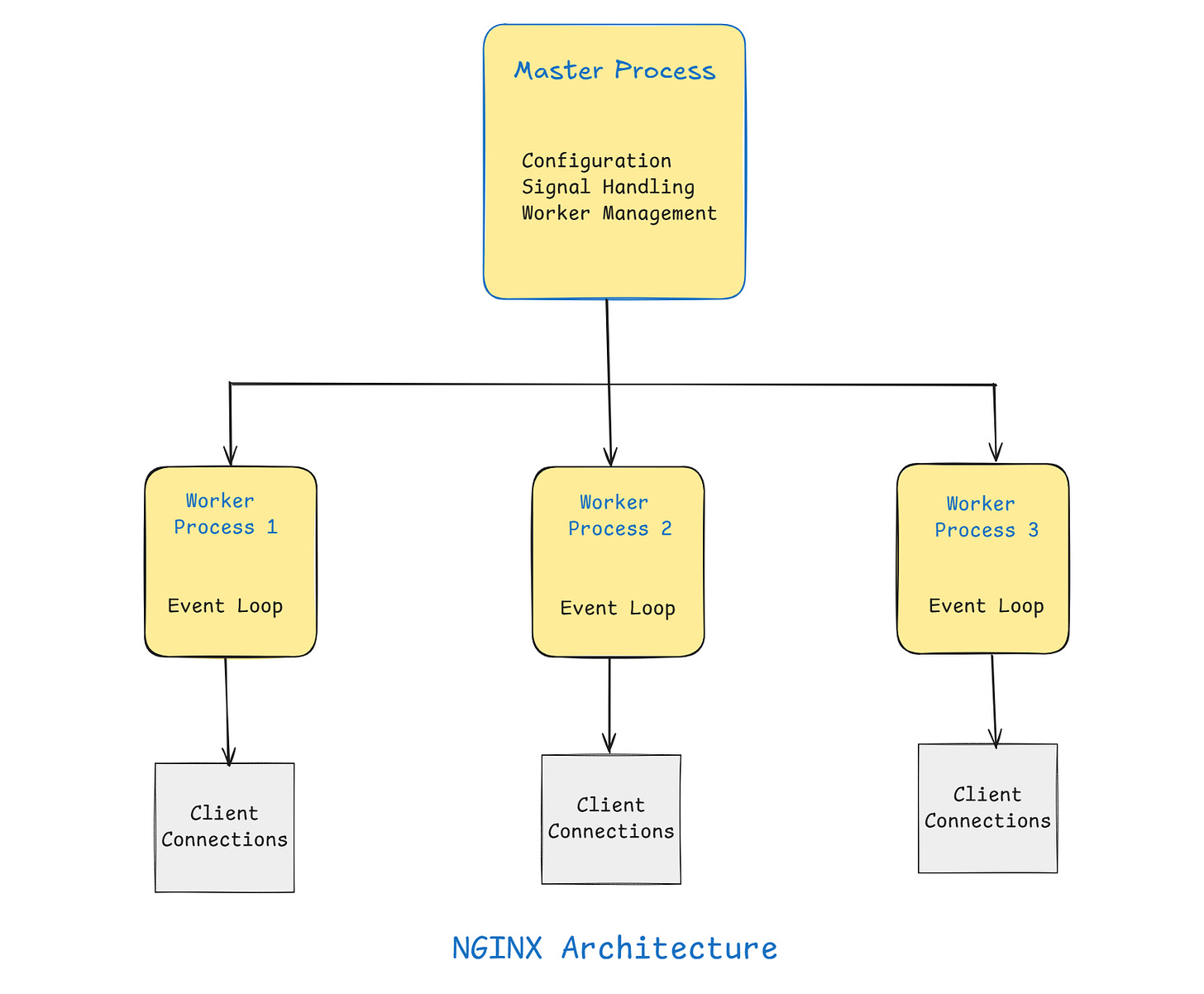

Detailed Breakdown of NGINX Components

1. Master Process

The master process is the central coordinator of NGINX's operations. It runs as a single process, normally started with root privileges (needed to bind privileged ports such as 80 or 443), and performs the following tasks:

Configuration Management:

Reads and validates the NGINX configuration file (typically nginx.conf).

Parses directives and organizes them into a structured format for worker processes to use.

Reloads configuration without downtime using signals like SIGHUP (e.g., nginx -s reload).

Worker Process Supervision:

Spawns and manages worker processes based on the worker_processes directive (often set to auto to match CPU core count).

Monitors worker processes and restarts them if they crash.

Coordinates graceful shutdowns or restarts to avoid interrupting active connections.
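Graceful shutdowns can be bounded so that old workers holding long-lived connections do not linger indefinitely after a reload. A minimal sketch with an illustrative value (the directive is available since NGINX 1.11.11):

```nginx
# Main context: after 30 s, NGINX tries to close the connections still held
# by a gracefully shutting-down worker so that it can exit.
worker_shutdown_timeout 30s;
```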

Signal Handling:

Responds to operating system signals for tasks like:

Reload: SIGHUP reloads configuration without dropping connections.

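Restart and upgrade: On reload, the master starts new worker processes with the updated configuration and tells the old ones to finish their in-flight requests and exit. For binary upgrades, SIGUSR2 starts a new master running the new executable, and SIGWINCH gracefully shuts down the old master's workers.

Shutdown: SIGQUIT (nginx -s quit) shuts NGINX down gracefully, while SIGTERM or SIGINT (nginx -s stop) performs a fast shutdown.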
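These signals are sent to the master process, whose PID NGINX records in the file named by the pid directive; nginx -s reads that file to find the master. A sketch (the path shown is a common choice, but the built-in default depends on how NGINX was compiled):

```nginx
# Main context: where the master process writes its PID.
# "nginx -s reload" and "kill -HUP $(cat /run/nginx.pid)" both target this process.
pid /run/nginx.pid;
```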

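Example command to reload:

```bash
# Check the configuration for errors, then tell the master process to reload it
sudo nginx -t && sudo nginx -s reload
```

Privilege Management: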

Runs as root to bind to privileged ports (e.g., 80 for HTTP, 443 for HTTPS).

Drops privileges for worker processes by switching to a non-privileged user (e.g., www-data or nginx) for security.

The master process does not handle client connections directly, ensuring it remains lightweight and focused on orchestration.
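For example, the ports below are bound by the master before the workers switch to the unprivileged user. In this sketch the certificate paths are placeholders; because the master loads the certificate files named here while reading the configuration, the private key can typically stay readable by root only:

```nginx
http {
    server {
        # Privileged ports: the listening sockets are opened by the master (root)
        # and inherited by the unprivileged worker processes.
        listen 80;
        listen 443 ssl;

        # Hypothetical paths, loaded while the master reads the configuration,
        # before workers drop privileges.
        ssl_certificate     /etc/nginx/tls/example.crt;
        ssl_certificate_key /etc/nginx/tls/example.key;
    }
}
```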


2. Worker Processes

Worker processes are responsible for the actual work of handling client connections and processing requests. Key characteristics include:

Non-Privileged Execution:

Worker processes run as a non-privileged user (e.g., nginx or www-data) to minimize security risks.

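The user is set with the user directive; the master process applies it to the worker processes it spawns:

```nginx
# Main (top-level) context: run worker processes as the unprivileged "nginx" user
user nginx;
```

Single-Threaded Event Loop: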

Each worker process runs a single-threaded event loop, processing multiple client connections concurrently.

The event loop monitors sockets for events (e.g., new connections, data received) and processes them asynchronously.

Number of Workers:

The number of worker processes is configured via the worker_processes directive, typically set to auto to match the number of CPU cores:

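```nginx
worker_processes auto;
```

Each worker can handle many simultaneous connections, up to the limit set by the worker_connections directive (which counts connections to upstream servers as well as client connections):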

```nginx
events {
    # Per-worker cap on simultaneous connections (clients and upstreams); the default is 512
    worker_connections 1024;
}
```

Socket Sharing:

All worker processes share the same listening sockets (e.g., port 80 or 443).

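By default, every worker inherits the same listening sockets from the master, and the workers compete to accept new connections. With the reuseport parameter of the listen directive (NGINX 1.9.1+), NGINX instead creates a separate listening socket for each worker using SO_REUSEPORT (Linux), and the kernel spreads incoming connections among them.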
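The reuseport parameter of the listen directive gives each worker its own listening socket via SO_REUSEPORT. A minimal sketch; reuseport requires NGINX 1.9.1 or later and an operating system that supports SO_REUSEPORT, and, like other socket parameters, it may be specified only once for a given address and port:

```nginx
http {
    server {
        # One listening socket per worker; the kernel spreads new connections among them
        listen 80 reuseport;
    }
}
```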

Request Processing:

Workers handle tasks like:

Accepting client connections.

Reading and parsing HTTP requests.

Serving static files, proxying requests, or load balancing.

Sending responses back to clients.

Workers communicate with upstream servers (e.g., application servers) for reverse proxy scenarios.
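For the reverse proxy case, a minimal location block might look like this sketch; the application server on 127.0.0.1:3000 is a hypothetical example:

```nginx
# Inside a server block
location / {
    proxy_pass http://127.0.0.1:3000;                              # forward to the app server
    proxy_set_header Host            $host;                        # keep the client's Host header
    proxy_set_header X-Real-IP       $remote_addr;                 # pass the client address
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;   # append to the proxy chain
}
```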

If you’re still with me on this NGINX deep dive, let me tell you a quick joke.

Putting twins to bed is like session management.

If I start with one, I’d better stick to that one until they sleep - otherwise, like a bad load balancer without sticky sessions, I’ll just keep getting redirected back and forth all night.

Lesson: Sometimes, “sticky sessions” save your sanity 😆.

3. Event Loop Architecture

The event loop is the core of NGINX’s high-performance design. It enables each worker process to handle thousands of connections efficiently. Here’s how it works:

Asynchronous I/O:

NGINX uses non-blocking I/O operations, meaning it doesn’t wait for one operation to complete before starting another.

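For example, when reading data from a client or writing to a backend server, NGINX asks the operating system to notify it when the socket is ready, then moves on to other connections instead of waiting; when the notification arrives, it resumes the pending read or write.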
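One caveat: reads from regular files can still block a worker, because readiness notifications such as epoll do not apply to disk files. NGINX can offload such reads to a thread pool, as in this sketch, which assumes a build with thread support (--with-threads, NGINX 1.7.11+); the pool name disk_io, the sizes, and the paths are illustrative:

```nginx
# Main context: a pool of threads for blocking file operations
thread_pool disk_io threads=16 max_queue=65536;

http {
    server {
        location /downloads/ {
            root /var/www;
            aio threads=disk_io;   # file reads run in the pool; the event loop keeps serving
        }
    }
}
```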

Event Notification Mechanisms:

NGINX relies on OS-specific mechanisms to monitor events:

Linux: Uses epoll for efficient event handling.

FreeBSD/macOS: Uses kqueue.

Windows: The native Windows build falls back to the less scalable select() and poll() methods; it does not use IOCP (I/O Completion Ports).

These mechanisms notify NGINX when a socket is ready for reading, writing, or other actions.
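NGINX normally selects the most efficient available method on its own, so the use directive is rarely needed; this sketch sets it explicitly only to make the choice visible:

```nginx
events {
    use epoll;          # Linux; "kqueue" on FreeBSD and macOS
    multi_accept on;    # accept all pending new connections per notification, not one at a time
}
```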

Event Loop Workflow:

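The worker process waits for events reported by the operating system (e.g., new connections, or sockets ready for reading or writing) and tracks pending timeouts, such as idle keepalive connections, with timers.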

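In each iteration, the event loop sleeps in the notification call (e.g., epoll_wait) until at least one event is ready or the nearest timer expires, handles every ready event without blocking, processes expired timers, and then repeats.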

Example workflow:

Accept a new client connection.

Read the HTTP request headers.

Forward the request to an upstream server (if acting as a reverse proxy).

Send the response back to the client.

Close or keep the connection alive based on the protocol.
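Each waiting step in this workflow is guarded by a timer in the worker's event loop; if the awaited event does not arrive in time, NGINX gives up on the connection. A sketch with illustrative values (all four directives default to 60 seconds):

```nginx
http {
    client_header_timeout 60s;   # waiting for the client to send the request headers
    client_body_timeout   60s;   # waiting between reads of the request body
    proxy_read_timeout    60s;   # waiting between reads of the upstream response
    send_timeout          60s;   # waiting between writes of the response to the client
}
```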

Keepalive Connections:

NGINX supports HTTP keepalive, allowing multiple requests to reuse the same connection, reducing overhead.
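On the client side, keepalive is tuned with a couple of http-level directives; the values in this sketch are illustrative:

```nginx
http {
    keepalive_timeout  65s;    # close a client connection after 65 s with no new request
    keepalive_requests 1000;   # close it after serving this many requests
}
```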

Example configuration for upstream keepalive:

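```nginx
upstream backend {
    server backend1.example.com;
    keepalive 32;  # max idle connections to this upstream cached per worker process
}

# In the server block that proxies to it, upstream keepalive also requires:
location / {
    proxy_pass http://backend;
    proxy_http_version 1.1;          # the default HTTP/1.0 closes each upstream connection
    proxy_set_header Connection "";  # don't forward "Connection: close" upstream
}
```

Visualizing NGINX Architecture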

Master Process:

Runs as a single entity, managing the overall system.

Spawns and supervises worker processes.

Handles signals and configuration updates.

Worker Processes:

Each worker operates independently, handling client connections.

Workers share listening sockets, and the kernel distributes incoming connections.

Each worker runs an event loop to process requests asynchronously.

Conclusion

In this first part, we explored what NGINX is, its core characteristics, and the internal architecture that makes it so efficient. We broke down its components — from the master process that manages lifecycle events to the workers that handle client requests through the powerful event loop model. Together, these design choices explain why NGINX has become the backbone of so many high-performance systems today.

But understanding the architecture is just the foundation. To truly appreciate NGINX, we also need to see how it handles requests in practice.

🔜 Coming Up in Part 2

In the next part, we’ll dive deeper into:

How NGINX processes requests step by step

The phases of the event loop in action

Why the event-driven model is so advantageous

Real-world scenarios where this model shines

This will connect the theory of its internals to the practical performance benefits that make NGINX one of the most trusted web servers and reverse proxies in the world.

Footnotes

[1] The C10K problem was a major challenge in late 1990s networking: optimizing servers and operating systems to efficiently handle ten thousand concurrent client connections. Traditional thread-per-connection models consumed excessive resources, prompting innovation in event-driven, asynchronous I/O architectures for scalable performance.

[2] Apache HTTP Server is a widely used, open-source web server developed by the Apache Software Foundation. It enables delivery of web content via HTTP and HTTPS, supports modular extensions, virtual hosting, dynamic scripting languages, and runs on multiple operating systems, forming the backbone for millions of sites worldwide.

[3] A worker process is a single process dedicated to executing application code and handling specific tasks such as serving web requests or background jobs. In web servers like NGINX or IIS, worker processes manage connections, isolate workloads, and improve scalability by running independently from other server processes, often using event-driven architectures for efficiency.

[4] The master-worker process model is a common server architecture where a master process manages one or more worker processes. The master process handles overall control tasks like reading configuration, managing workers, and handling signals. Worker processes execute the actual workload such as handling client connections and requests independently, using event-driven loops for efficiency. This model improves scalability, fault isolation, and resource management by separating control and execution responsibilities.