Ep #21: Inside DynamoDB — Part 1: Architecture, Evolution & Ecosystem Fit

From its origins at Amazon to powering millions of TPS — what makes DynamoDB a system design essential

Ep #21: Breaking the complex System Design Components - Free Post

Welcome to DynamoDB Week — a special series on Architect’s Notebook that explores how DynamoDB powers some of the most demanding systems on the planet.

This is Part 1 of a multi-part deep dive where we’ll unpack DynamoDB not just as a NoSQL database, but as a critical building block in modern, high-scale architectures.

This Week’s Topic:

“Why DynamoDB Works the Way It Does — The Fundamentals from a System Design Lens”

We’ll explore:

The design motivations behind DynamoDB at Amazon scale

Core building blocks: partitions, keys, consistency, and throughput

Trade-offs made for performance, availability, and scalability

Real-world analogies to make the internals intuitive

How to think about DynamoDB in system design interviews

This isn’t a documentation dump — it’s a guided walkthrough designed to build clarity and real-world intuition.

What is DynamoDB?

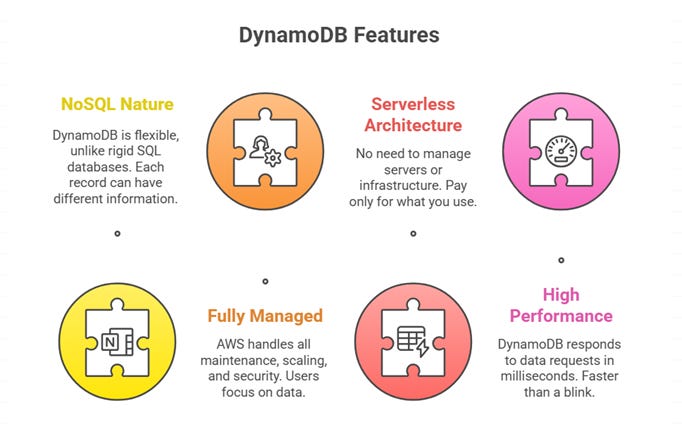

DynamoDB is a fully managed NoSQL database service provided by Amazon Web Services (AWS). It's designed to deliver single-digit millisecond performance at any scale, making it perfect for applications that need to respond quickly to millions of users simultaneously. It is serverless, distributed, and highly available by default, eliminating the need for manual provisioning, patching, or replication.

Key Characteristics That Make DynamoDB Special

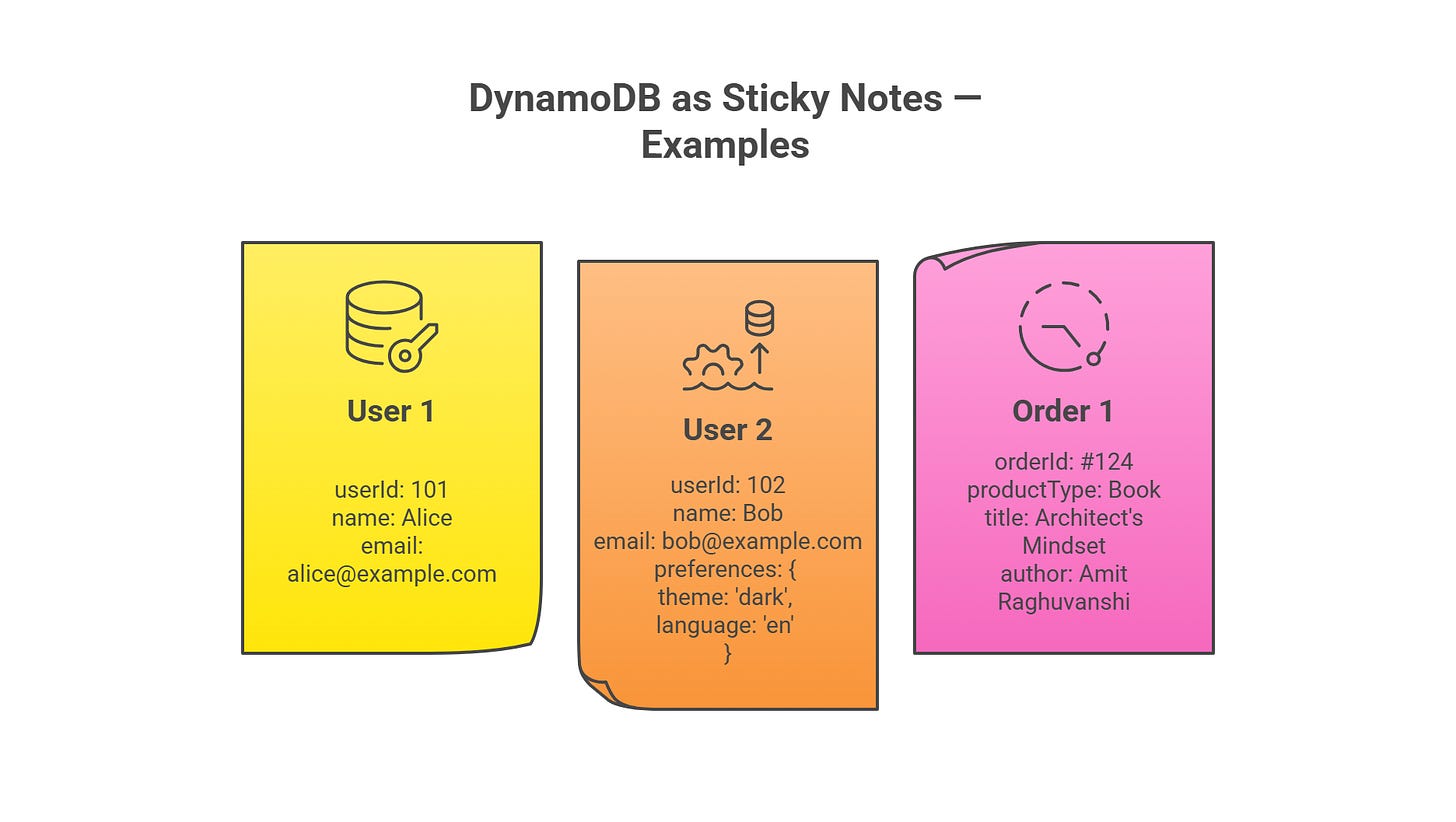

1. NoSQL Nature Think of traditional databases (SQL) like a rigid spreadsheet where every row must have the same columns. DynamoDB is more like a collection of sticky notes - each note (record) can have different information on it, and you don't need to follow a strict format.
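To make the sticky-note analogy concrete, here is a minimal sketch in plain Python (it does not call DynamoDB). The `user_id` key and every other attribute name are hypothetical. The only rule modeled is that each item must carry the table's primary key, while everything else is free-form.

```python
# Toy illustration of schemaless items: plain dicts, not real DynamoDB calls.
# All attribute names below are hypothetical.
PRIMARY_KEY = "user_id"

items = [
    {"user_id": "u1", "name": "Asha", "email": "asha@example.com"},
    {"user_id": "u2", "name": "Ben", "loyalty_tier": "gold", "tags": ["beta"]},
    {"user_id": "u3", "last_login": "2024-01-15"},
]

def has_primary_key(item: dict) -> bool:
    """The only structural requirement modeled here: the key must be present."""
    return PRIMARY_KEY in item

# Every item passes, even though no two items share the same set of attributes.
all_valid = all(has_primary_key(item) for item in items)
attribute_sets = [sorted(set(item) - {PRIMARY_KEY}) for item in items]
print(all_valid, attribute_sets)
```

In a SQL table, the second and third items would need new columns or NULL-filled ones; here they simply carry different attributes.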

2. Fully Managed Imagine having a personal assistant who takes care of all behind-the-scenes work. With DynamoDB, AWS handles:

Hardware maintenance and upgrades

Software patches and updates

Backup and recovery

Scaling up or down based on demand

Security and monitoring

You just focus on storing and retrieving your data.

3. Serverless Architecture You don't need to worry about servers, operating systems, or infrastructure. It's like using electricity - you flip the switch, and it works. You only pay for what you use.

4. High Performance DynamoDB typically responds to data requests in less than 10 milliseconds. To put this in perspective, that's faster than the blink of an eye (which takes about 100-150 milliseconds).

Real-World Analogy

Think of DynamoDB as Amazon's own internal logistics system. Just like Amazon can deliver millions of packages worldwide with incredible speed and reliability, DynamoDB can handle millions of data requests with the same efficiency. In fact, Amazon built DynamoDB to solve their own massive scale challenges.

Brief History and Evolution

The Problem That Started It All (Early 2000s)

In the early 2000s, Amazon was growing rapidly, but their traditional databases couldn't keep up. During peak shopping periods like Black Friday, their systems would slow down or crash. They needed something that could:

Handle massive traffic spikes

Never go down

Scale automatically

Perform consistently

The Birth of Dynamo (2007)

Amazon's engineers created an internal system called "Dynamo" (note: without the "DB") and described it publicly in a 2007 paper. This wasn't a database in the traditional sense, but a distributed key-value storage system built to handle Amazon's scale. The principles behind Dynamo were revolutionary:

Key Innovation: Distributed Architecture Instead of one giant computer handling all requests, they used many smaller computers working together. If one computer failed, others would continue working seamlessly.

Key Innovation: Eventual Consistency They realized that for many use cases, it's okay if different parts of the system take a moment to sync up, as long as they eventually become consistent. This trade-off allowed for much better performance and availability.
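The trade-off is easier to see in a toy model. The sketch below is an assumption-heavy simplification, not how Dynamo actually replicated data: the class names, the single "primary" replica, and the explicit `replicate()` step are all invented for illustration. It only shows the behavior described above: a read from a lagging replica can be stale for a moment, then catches up.

```python
from collections import deque

class Replica:
    """One copy of the data (hypothetical stand-in for a storage node)."""
    def __init__(self, name):
        self.name = name
        self.data = {}

class ToyStore:
    """Writes land on one replica right away and reach the others later."""
    def __init__(self, replica_names):
        self.replicas = [Replica(n) for n in replica_names]
        self.pending = deque()  # writes not yet applied to the followers

    def write(self, key, value):
        self.replicas[0].data[key] = value   # acknowledged immediately
        self.pending.append((key, value))    # followers catch up later

    def read(self, key, replica_index):
        return self.replicas[replica_index].data.get(key)

    def replicate(self):
        """Apply all pending writes to the follower replicas."""
        while self.pending:
            key, value = self.pending.popleft()
            for follower in self.replicas[1:]:
                follower.data[key] = value

store = ToyStore(["a", "b", "c"])
store.write("cart:42", ["book"])
stale = store.read("cart:42", replica_index=2)  # follower has not caught up yet
store.replicate()
fresh = store.read("cart:42", replica_index=2)  # replicas now agree
print(stale, fresh)
```

The write returns before every copy is updated, which is what buys the speed and availability; the cost is the brief window where `stale` and `fresh` differ.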

The Public Launch (2012)

After years of internal success, Amazon decided to offer this technology as a service to other companies. They called it DynamoDB and launched it as part of AWS. The key improvements over the internal Dynamo included:

Better consistency options

Simpler API for developers

Integrated monitoring and management tools

Pay-as-you-go pricing model

Major Evolution Milestones

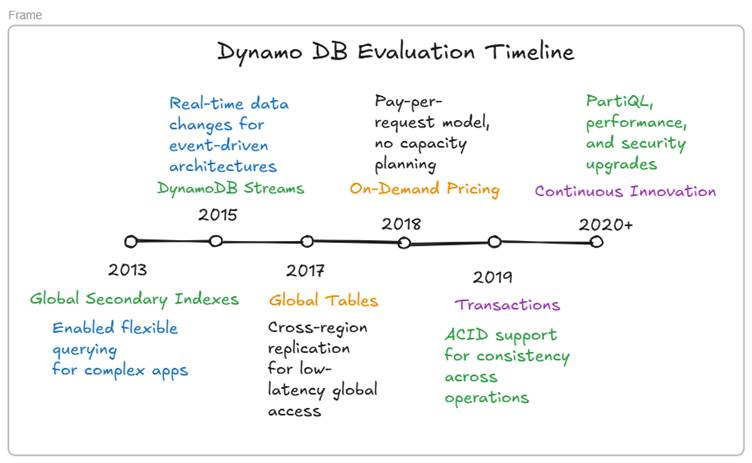

2013: Global Secondary Indexes Added the ability to query data in different ways, making DynamoDB more flexible for complex applications.

2015: DynamoDB Streams Introduced the ability to capture and respond to data changes in real-time, enabling event-driven architectures.

2017: Global Tables Enabled automatic replication across multiple geographic regions, allowing global applications to serve users with low latency worldwide.

2018: On-Demand Pricing Introduced pay-per-request pricing, eliminating the need to predict capacity requirements.

2018: Transactions Added support for ACID transactions, allowing all-or-nothing changes across multiple items and tables.

2020-Present: Continuous Innovation Regular improvements in performance, new features like PartiQL (SQL-like query language), and enhanced security features.

Position in AWS Ecosystem

DynamoDB's Role in the AWS Family

Think of AWS as a massive toolkit for building applications in the cloud. DynamoDB serves as the high-performance storage engine in this toolkit. Here's how it fits with other AWS services:

1. AWS Lambda (Serverless Computing) DynamoDB + Lambda = Perfect match for event-driven applications

Lambda functions can be triggered when DynamoDB data changes (via DynamoDB Streams)

Both scale automatically based on demand

Both follow pay-per-use pricing
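Here is a rough sketch of what a Lambda function reacting to table changes might look like. It assumes the function is wired to a DynamoDB stream, which delivers batches of records carrying an `eventName` (`INSERT`, `MODIFY`, or `REMOVE`) and the changed item's keys in DynamoDB's typed format. The `order_id` key, the function name, and the sample event are hypothetical, and the sample is trimmed to the fields the handler reads.

```python
def stream_handler(event, context=None):
    """Group the keys of changed items by the kind of change."""
    summary = {"INSERT": [], "MODIFY": [], "REMOVE": []}
    for record in event.get("Records", []):
        event_name = record.get("eventName")
        keys = record.get("dynamodb", {}).get("Keys", {})
        # Stream records use typed values, e.g. {"S": "o-1"} for a string.
        order_id = keys.get("order_id", {}).get("S")
        if event_name in summary:
            summary[event_name].append(order_id)
    return summary

# Trimmed, hand-written sample event for local experimentation.
sample_event = {
    "Records": [
        {"eventName": "INSERT", "dynamodb": {"Keys": {"order_id": {"S": "o-1"}}}},
        {"eventName": "MODIFY", "dynamodb": {"Keys": {"order_id": {"S": "o-1"}}}},
        {"eventName": "REMOVE", "dynamodb": {"Keys": {"order_id": {"S": "o-2"}}}},
    ]
}
result = stream_handler(sample_event)
print(result)
```

A real handler would do something useful per record, such as sending a notification or updating a search index, instead of just collecting keys.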

2. Amazon API Gateway Creates a complete serverless backend:

API Gateway handles incoming requests

Lambda processes the business logic

DynamoDB stores and retrieves data
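The three-step flow above can be sketched as a single Lambda handler. This is a simplified illustration, assuming API Gateway's Lambda proxy integration (which passes `pathParameters` in and expects `statusCode` and `body` back). The `FAKE_TABLE` dict stands in for a DynamoDB table so the example runs locally; the route, the function name, and the item shape are hypothetical.

```python
import json

# Stand-in for a DynamoDB table; a real handler would call the AWS SDK here.
FAKE_TABLE = {"u1": {"user_id": "u1", "name": "Asha"}}

def api_handler(event, context=None):
    """Handle a GET /users/{user_id} request in Lambda proxy format."""
    user_id = (event.get("pathParameters") or {}).get("user_id")
    item = FAKE_TABLE.get(user_id)  # the "DynamoDB stores and retrieves" step
    if item is None:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {"statusCode": 200, "body": json.dumps(item)}

found = api_handler({"pathParameters": {"user_id": "u1"}})
missing = api_handler({"pathParameters": {"user_id": "nope"}})
print(found["statusCode"], missing["statusCode"])
```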

3. Amazon CloudWatch Provides monitoring and alerting:

Tracks DynamoDB performance metrics

Sets up automatic alerts for issues

Helps optimize costs and performance

4. AWS IAM (Identity and Access Management) Provides security:

Controls who can access your DynamoDB tables

Defines what operations users can perform

Integrates with other AWS security services
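As an illustration of "who can access" and "what operations", here is a small sketch that builds a read-only IAM policy document for one table. The region, account ID, and table name are placeholders, and the helper function is hypothetical; the action names and ARN layout follow the standard DynamoDB conventions.

```python
import json

def read_only_policy(region: str, account_id: str, table_name: str) -> dict:
    """Build a policy that allows reads, but no writes, on a single table."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": table_arn,
            }
        ],
    }

# Placeholder values; substitute your own region, account, and table.
policy = read_only_policy("us-east-1", "123456789012", "Orders")
print(json.dumps(policy, indent=2))
```

Because `dynamodb:PutItem` and `dynamodb:DeleteItem` are not listed, a user or role with only this policy can read from the table but cannot modify it.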

📖 Excerpt from The Architect’s Mindset – Chapter 4

Becoming a great architect isn't just about knowing patterns or tools — it's about knowing how to learn. The most valuable skill you can cultivate is the ability to teach yourself anything, deeply and efficiently.

The Feynman Technique is one of the simplest yet most powerful methods for mastering new concepts: explain what you're learning as if you're teaching it to a 5-year-old. When you struggle to simplify, it reveals the gaps in your understanding. Fill those gaps, and explain again — learning deepens each time.

Combine that with spaced repetition, a technique rooted in cognitive science. Instead of reviewing something over and over in one sitting, revisit it at gradually increasing intervals. This strengthens memory retention and long-term recall.

And then there’s deliberate practice — not just doing something repeatedly, but pushing the boundaries of your current ability with focused effort and feedback. Great learners don’t avoid discomfort; they seek it, because that’s where growth happens.

These meta-skills don’t just help you learn faster — they help you think deeper, solve harder problems, and build a mindset that compounds over time.

🎁 Get the Book Free (Worth $22)

As a thank-you to all loyal readers:

📚 Yearly subscribers of The Architect’s Notebook who join before September 5th will receive a FREE copy of The Architect’s Mindset - A Psychological Guide to Technical Leadership — a $22 value — at no additional cost.

Already a yearly member? You’re covered too.

New subscribers? Choose the yearly plan before Sep 5 and you’ll get the book automatically.

🛠️ This book distills years of architectural insights into real-world principles, mental models, and stories you can apply to your own career.

If you’ve ever wondered how to think like a system designer, this book was written for you.

Why DynamoDB is a Widely Used Storage Service

Key Advantages and Differentiators

1. Predictable Performance at Any Scale

The Promise: Single-Digit Millisecond Latency Imagine you're at a busy restaurant during peak hours. With traditional databases, as more customers arrive, service gets slower. With DynamoDB, it's like having a magical restaurant where service speed remains constant whether you have 10 customers or 10 million customers.

How It Works:

Consistent Performance: Whether you're handling 100 requests per second or 100 million, response times typically stay in the single-digit millisecond range, provided your keys spread the load evenly

No Performance Degradation: Unlike traditional databases that slow down as they grow, DynamoDB maintains speed through intelligent partitioning

Predictable Costs: You know exactly what performance you're getting for your money
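The "intelligent partitioning" idea can be sketched in a few lines. DynamoDB hashes each item's partition key to decide which partition stores it; the code below imitates that with SHA-256 as a stand-in for the internal hash function, and the `user#N` key format and partition counts are made up. It is a toy model meant to show why adding partitions keeps per-partition load roughly flat as data grows.

```python
import hashlib
from collections import Counter

def partition_for(partition_key: str, num_partitions: int) -> int:
    """Map a key to a partition index via a hash (SHA-256 as a stand-in)."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions

def busiest_partition(num_items: int, num_partitions: int) -> int:
    """Return the item count of the most heavily loaded partition."""
    counts = Counter(
        partition_for(f"user#{i}", num_partitions) for i in range(num_items)
    )
    return max(counts.values())

small = busiest_partition(10_000, 4)     # small table, few partitions
large = busiest_partition(100_000, 40)   # 10x the data, 10x the partitions
print(small, large)
```

Both numbers land near 2,500 items, so each partition does about the same amount of work even though the table grew tenfold. The flip side is that a single very popular key still maps to one partition, which is why key design matters.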

Real-World Impact: Netflix uses DynamoDB as a key part of its infrastructure to power real-time, low-latency services for over 200 million users. It's used in personalization pipelines, including storing and serving data that helps generate recommendations like "Because you watched...". DynamoDB responds in milliseconds to support this seamless experience at global scale.

2. True Serverless Experience

What Serverless Really Means: Think of it like using a taxi service versus owning a car. With traditional databases (owning a car), you need to:

Buy and maintain the vehicle (servers)

Handle repairs and upgrades

Pay for parking (infrastructure) even when not driving

Predict how many cars you'll need

With DynamoDB (taxi service), you simply request a ride and pay only for the distance traveled.

Key Benefits:

Zero Infrastructure Management: No servers to provision, patch, or maintain

Automatic Scaling: Handles traffic spikes without human intervention

Pay-Per-Use: Only pay for actual read/write requests and storage used

No Downtime for Maintenance: AWS handles all maintenance transparently

3. Built-In Enterprise Features

Security That Works Out of the Box:

Encryption: All data encrypted automatically (both stored data and data in transit)

Access Control: Fine-grained permissions using AWS IAM

VPC Integration: VPC endpoints let your applications reach DynamoDB without sending traffic over the public internet

Compliance: Meets strict standards like HIPAA, SOC, and PCI DSS

Reliability You Can Trust:

99.99% Availability SLA: AWS commits to 99.99% availability for standard tables, which allows for roughly 52 minutes of downtime per year.

Multi-AZ Deployment: Your data is automatically replicated across multiple data centers

Point-in-Time Recovery: When enabled, lets you restore a table to any second within the last 35 days

On-Demand Backups: Full table backups with no impact on table performance (they can be scheduled through AWS Backup)
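The downtime figure quoted for the availability SLA above is easy to check. This short calculation assumes a 365-day year; the function name is just for illustration.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years

def downtime_minutes_per_year(availability_percent: float) -> float:
    """Minutes per year a service may be down at a given availability level."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)

four_nines = downtime_minutes_per_year(99.99)
print(round(four_nines, 2))  # about 52.56 minutes
```

Each extra "nine" cuts the allowance by a factor of ten, so 99.999% leaves only about five minutes per year.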

4. Developer-Friendly Features

Simple but Powerful API: Instead of learning complex SQL syntax, developers can use simple commands:

// Get an item

GetItem(TableName, Key)

// Put an item

PutItem(TableName, Item)

// Query items

Query(TableName, KeyCondition)

Multiple Programming Language Support:

SDKs available for Java, Python, JavaScript, .NET, PHP, Ruby, Go, and more

Same API works across all languages

Extensive documentation and community support
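To get a feel for the three operations listed earlier (GetItem, PutItem, Query) without an AWS account, here is a small in-memory stand-in. It is not the AWS SDK: `ToyTable`, its method names, and the `customer_id` / `order_date` attributes are all hypothetical. It only mimics the basic shape of a table with a partition key and a sort key, where Query reads one partition in sort-key order.

```python
from collections import defaultdict

class ToyTable:
    """In-memory stand-in for a table with a partition key and a sort key."""
    def __init__(self, partition_key: str, sort_key: str):
        self.partition_key = partition_key
        self.sort_key = sort_key
        self._partitions = defaultdict(dict)  # pk value -> {sk value: item}

    def put_item(self, item: dict) -> None:
        pk, sk = item[self.partition_key], item[self.sort_key]
        self._partitions[pk][sk] = dict(item)  # replaces any existing item

    def get_item(self, pk, sk):
        return self._partitions.get(pk, {}).get(sk)

    def query(self, pk, sk_prefix: str = "") -> list:
        """Return the items in one partition, ordered by sort key."""
        rows = self._partitions.get(pk, {})
        return [rows[sk] for sk in sorted(rows) if sk.startswith(sk_prefix)]

orders = ToyTable("customer_id", "order_date")
orders.put_item({"customer_id": "c1", "order_date": "2024-03-01", "total": 40})
orders.put_item({"customer_id": "c1", "order_date": "2024-01-15", "total": 25})
orders.put_item({"customer_id": "c2", "order_date": "2024-02-10", "total": 99})

one_order = orders.get_item("c1", "2024-01-15")
c1_dates = [o["order_date"] for o in orders.query("c1", sk_prefix="2024")]
print(one_order, c1_dates)
```

Notice that every call names a partition key up front. That constraint is what lets the real service route a request straight to the right partition instead of scanning the whole table.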

5. Global Scale Capabilities

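Global Tables - Multi-Region Magic: Imagine having identical stores in New York, London, and Tokyo that automatically sync their inventory in near real time. That's what Global Tables do for your data: a write in one region is replicated asynchronously to the others, typically within about a second.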

Benefits:

Local Performance Everywhere: Users in Asia access Asian data centers, Europeans access European centers

Disaster Recovery: If one region goes down, others continue serving traffic

Compliance: Keep data in specific geographic regions to meet local regulations
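When two regions update the same item at nearly the same time, Global Tables reconcile the conflict with a "last writer wins" rule. The sketch below is a toy version of that idea, assuming each value is stored alongside a write timestamp; the function name, the SKU keys, and the integer timestamps are invented for illustration.

```python
def merge_last_writer_wins(local: dict, remote: dict) -> dict:
    """Merge two region copies, keeping the newer (value, timestamp) per key."""
    merged = dict(local)
    for key, (value, ts) in remote.items():
        if key not in merged or ts > merged[key][1]:
            merged[key] = (value, ts)
    return merged

# Each entry is (stock_count, write_timestamp); all values are made up.
new_york = {"sku-1": (10, 100), "sku-2": (5, 120)}
tokyo = {"sku-1": (8, 130), "sku-3": (7, 90)}

synced = merge_last_writer_wins(new_york, tokyo)
print(synced)
```

Tokyo's later write to `sku-1` wins, and items that exist in only one region are copied across. The earlier New York value for `sku-1` is silently discarded, which is worth keeping in mind when several regions write to the same items.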

Notable Customer Success Stories

Gaming Industry:

Supercell (Clash of Clans): Handles millions of concurrent players

Zynga: Powers real-time gaming experiences for hundreds of millions of users

Epic Games (Fortnite): Manages player data and matchmaking for 400+ million users

E-commerce and Retail:

Airbnb: Manages booking and user data for millions of properties

Lyft: Handles ride matching and driver location tracking

Samsung: Powers SmartTV applications and user experiences

Media and Entertainment:

Netflix: Recommendation engine and user viewing data

Disney+: Streaming service backend and content delivery

Snapchat: Real-time messaging and multimedia content storage

Financial Services:

Capital One: Credit card transaction processing and fraud detection

Robinhood: Real-time trading platform backend

Coinbase: Cryptocurrency trading and wallet management

Conclusion: Setting the Foundation

DynamoDB isn’t just another NoSQL database — it’s a battle-tested, cloud-native data engine built for scale, simplicity, and resilience. In this first part, we explored its origins, why it matters in the AWS ecosystem, and what makes it a go-to choice for high-performance systems.

From Netflix to Amazon itself, DynamoDB continues to power mission-critical workloads at massive scale — and understanding its fundamentals is the first step toward designing systems that are truly cloud-native.

But this is just the beginning.

🔒 Up Next: Part 2 – Should You Choose DynamoDB?

In this Premium-only post (coming Friday), we’ll cover:

🔄 DynamoDB vs MongoDB, Redis, Cassandra & Firehose

🧠 When to choose DynamoDB (and when not to)

⚙️ Real-world examples where DynamoDB shines — from IoT to serverless SaaS

If you’ve enjoyed Part 1 and want to keep building momentum, now’s the perfect time to become a Premium member.

This is where the real architectural decision-making begins.

Don’t miss it. 👇

Stay tuned for the next post in The Architect’s Notebook series.

– Amit Raghuvanshi

Author, The Architect’s Notebook
