Cracking Consistent Hashing: Why It Matters in Distributed Systems

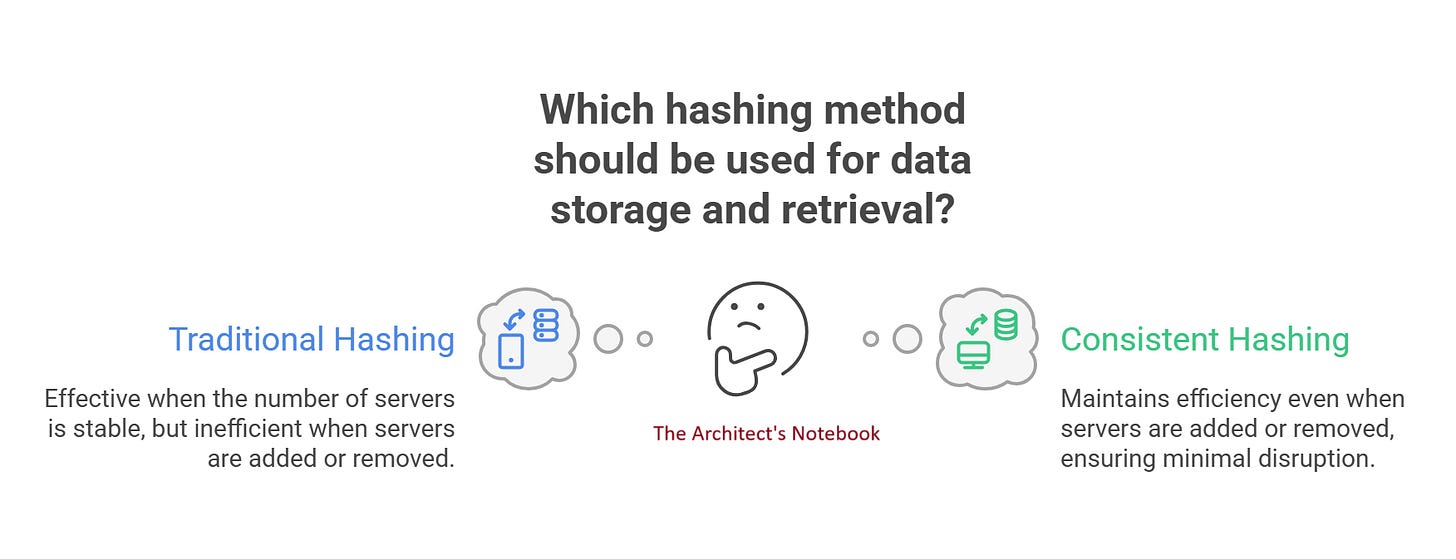

Discover the problems with traditional hashing and the promise of a better solution.

Ep #15: Breaking the complex System Design Components

In the world of distributed systems, ensuring that data and traffic are evenly distributed across multiple nodes is a critical challenge. Whether it's a distributed database, caching system, or a load balancer, the goal is to achieve scalability, fault tolerance, and efficient resource utilization. One powerful technique that helps achieve these goals is consistent hashing. But before we get into it, let’s understand the problem it solves.

What is Hashing?

Before diving into consistent hashing, let’s start with the basics. Hashing is a process that takes an input—like a piece of data or a request—and turns it into a fixed-size number called a hash value, hash code, or digest. Hashing is widely used in data structures (e.g., hash tables), cryptography, and data integrity verification.

A hash function is a mathematical function that takes an input (or "key") and generates a fixed-length string of characters, which is typically a number or a sequence of bits. The output is deterministic, meaning the same input always produces the same hash value. Hash functions are designed to be fast and efficient, enabling quick data access or comparison.

Hashing is generally used in data storage and retrieval. The hash value is used to map the input to a specific location, such as a server in a distributed system.

For example, imagine you have three servers, and you use a simple hash function to decide which server handles a request. You might calculate hash(request) % 3, where the result (0, 1, or 2) tells you which server to use. This method, called traditional hashing, works well when the number of servers doesn’t change. But what happens when you add or remove a server? That’s where the trouble begins.

hash("user123") % 3 → returns 0, 1, or 2 to assign to a server.The Problem with Traditional Hashing

In traditional hashing, if the number of servers changes, the mapping of data or requests to servers shifts dramatically. For example, if you add a fourth server, the formula changes to hash(request) % 4. Suddenly, almost all requests get reassigned to different servers. This causes several problems:

Massive Data Movement: In a distributed database, you’d need to move most of the data to new servers, which is slow and resource-heavy.

Disruption: In a caching system, this remapping invalidates cached data, causing a spike in cache misses and slowing things down.

Inefficiency: Constantly reorganizing data or requests when scaling up or down wastes time and can even lead to downtime.

Clearly, traditional hashing doesn’t handle change well. That’s why consistent hashing was invented.

Why Do We Need Consistent Hashing?

In a distributed system, data or requests need to be distributed across multiple nodes to ensure:

Load Balancing: No single node becomes a bottleneck by handling too much data or traffic.

Scalability: The system can handle more data or traffic by adding new nodes.

Fault Tolerance: If a node fails, the system can continue functioning with minimal disruption.

Minimal Reassignment: When nodes are added or removed, only a small portion of the data or traffic needs to be reassigned.

Traditional hashing methods (e.g., modulo hashing) don’t handle these requirements well. For example, in modulo hashing, data is assigned to a node using a formula like hash(key) % N, where N is the number of nodes. If N changes (e.g., a node is added or removed), almost all data needs to be remapped, causing significant overhead and disruption.

Consistent hashing solves this problem by providing a more flexible and stable way to map data to nodes.

❤️ Support this work:

If you’ve found value in these deep dives and want to keep them coming, consider pledging your subscription. Your support helps fuel future posts and keeps this space alive for thoughtful, technical storytelling.

What is Consistent Hashing?

Consistent hashing is a distributed hashing technique designed to map data (or requests) to nodes in a system in a way that minimizes disruption when nodes are added or removed. Unlike traditional hashing, which can cause significant remapping of data when the system changes, consistent hashing ensures that most data remains mapped to the same nodes, making it ideal for dynamic, scalable systems.

Imagine you’re running a distributed caching system like Memcached or a distributed database like Cassandra. You have multiple servers (nodes), and you need to decide which server should store or handle a particular piece of data (e.g., a user’s profile or a web request). Consistent hashing provides a way to assign data to nodes efficiently and consistently, even as the system grows or shrinks.

The trick? Consistent hashing maps both nodes (servers) and data (or requests) onto a circular space called a hash ring. Then, it assigns each piece of data to the “nearest” node on the ring. Let’s see how this works step by step.

FAQs related to Hashing

Q: What’s the difference between consistent hashing and traditional hashing?

A: Traditional hashing (e.g., modulo hashing) maps data to nodes using a fixed formula like hash(key) % N. If the number of nodes (N) changes, most data needs to be remapped. Consistent hashing uses a hash ring to map data to nodes, ensuring that only a small portion of data is affected when nodes are added or removed.

Q: Can consistent hashing guarantee perfect load balancing?

A: No, but it significantly improves load balancing, especially with virtual nodes. The quality of the hash function and the number of virtual nodes influence how evenly data is distributed.

Q: Where is consistent hashing used in real-world systems?

A: Consistent hashing is used in distributed databases (e.g., Cassandra, DynamoDB), caching systems (e.g., Memcached, Redis), load balancers, and CDNs (e.g., Akamai).

Q: Why does even distribution matter?

A: It prevents any one node from being overloaded, improving performance and reliability across the system.

🕰️ What’s Next in Part 2?

In Part 2, we’ll walk through how consistent hashing works step-by-step, explore the concept of virtual nodes, and look at real-world use cases.

Still with me?

If you’ve made it this far and are reading this line, thank you — it genuinely means a lot. 🙌

If this post resonated with you, please like it or leave a comment — it helps me know you're reading and that these words are making an impact.

I’d love to hear your thoughts:

What did you take away from this?

What topics would you like me to cover next?

Are there areas you'd like me to go deeper into?

Your feedback shapes what comes next, so don’t be shy — hit reply, drop a comment, or share it with someone who might find it useful.

Let’s keep learning and building, together. 🚀

Stay tuned 👀

It was really a nice series of writeups to start System Design. Thank you very much and appreciate your efforts.

Initially, when I started with system design learning, google search returned lot of bits and pieces. I was overwhelmed by the amount of details and different links which I had to go through - thought of quitting at one point.

Then I came through your writings - neat and precise, arranged progressively to help a new learner and everyone else too. Thanks again for these simple and yet effective writeups.

Keep up the good work.